Temporal-Difference Learning

TD learning is a combination of Monte Carlo ideas and dynamic programming (DP) ideas. Like Monte Carlo methods, TD methods can learn directly from raw experience without a model of the environment’s dynamics. Like DP, TD methods update estimates based in part on other learned estimates, without waiting for an outcome (they bootstrap).

TD Prediction

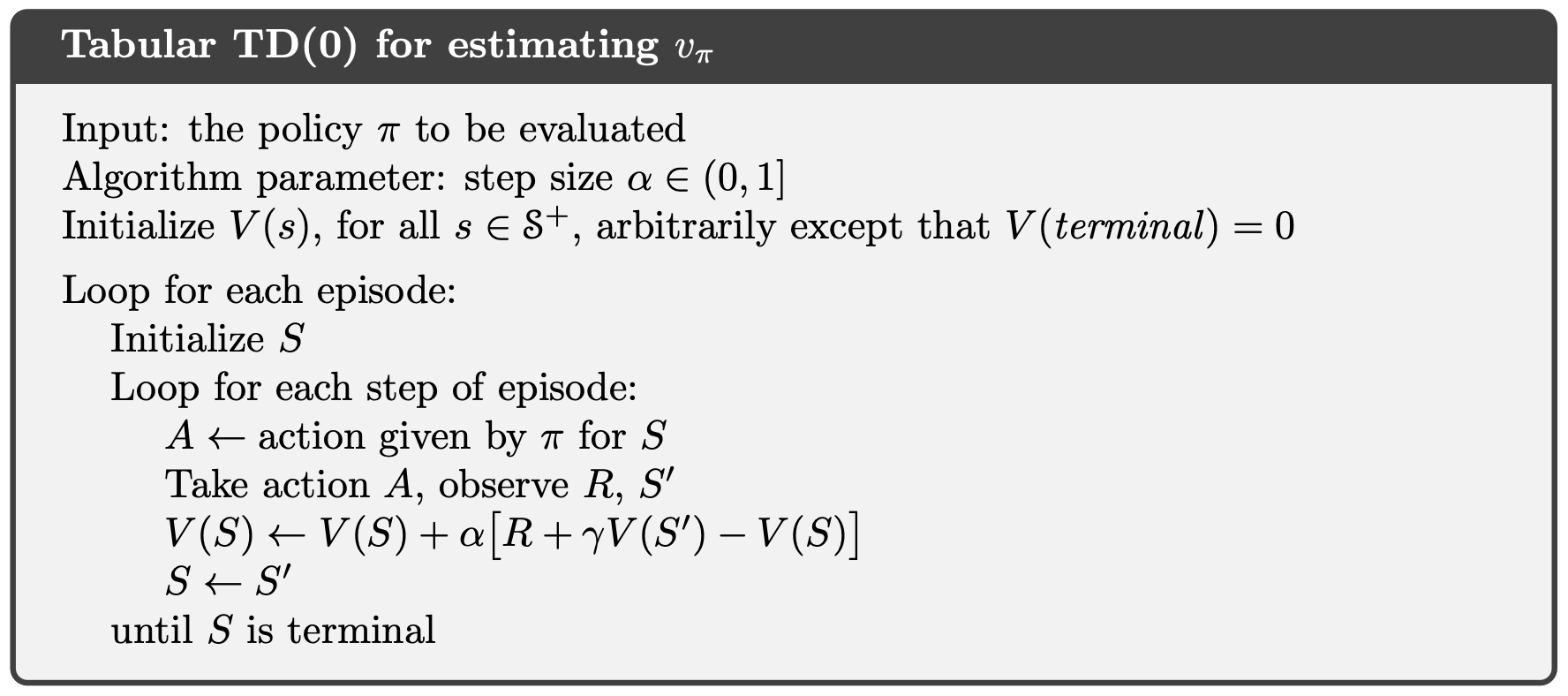

Whereas Monte Carlo methods must wait until the end of the episode to determine the increment to (only then is known), TD methods need to wait only until the next time step. At time they immediately form a target and make a useful update using the observed reward and the estimate . The simplest TD method makes the update

immediately on transition to and receiving . In effect, the target for the Monte Carlo update is , whereas the target for the TD update is . This TD method is called TD , or one-step , because it is a special case of the .

Finally, note that the quantity in brackets in the update is a sort of error, measuring the difference between the estimated value of and the better estimate . This quantity, called the TD error, arises in various forms throughout reinforcement learning:

Notice that the TD error at each time is the error in the estimate made at that time. Because the TD error depends on the next state and next reward, it is not actually available until one time-step later. That is, is the error in , available at time . Also note that if the array does not change during the episode (as it does not in Monte Carlo methods), then the Monte Carlo error can be written as a sum of TD errors:

This identity is not exact if is updated during the episode (as it is in ), but if the step size is small then it may still hold approximately. Generalizations of this identity play an important role in the theory and algorithms of temporal-difference learning.

Advantages of TD Prediction Methods

- TD methods generally converge faster than MC methods, although this has not been formally proven.

- TD methods do converge on the value function with a sufficiently small step size parameter, or with a decreasing step size.

- They are extremely useful for continuing tasks that cannot be broken down in episodes as required by MC methods.

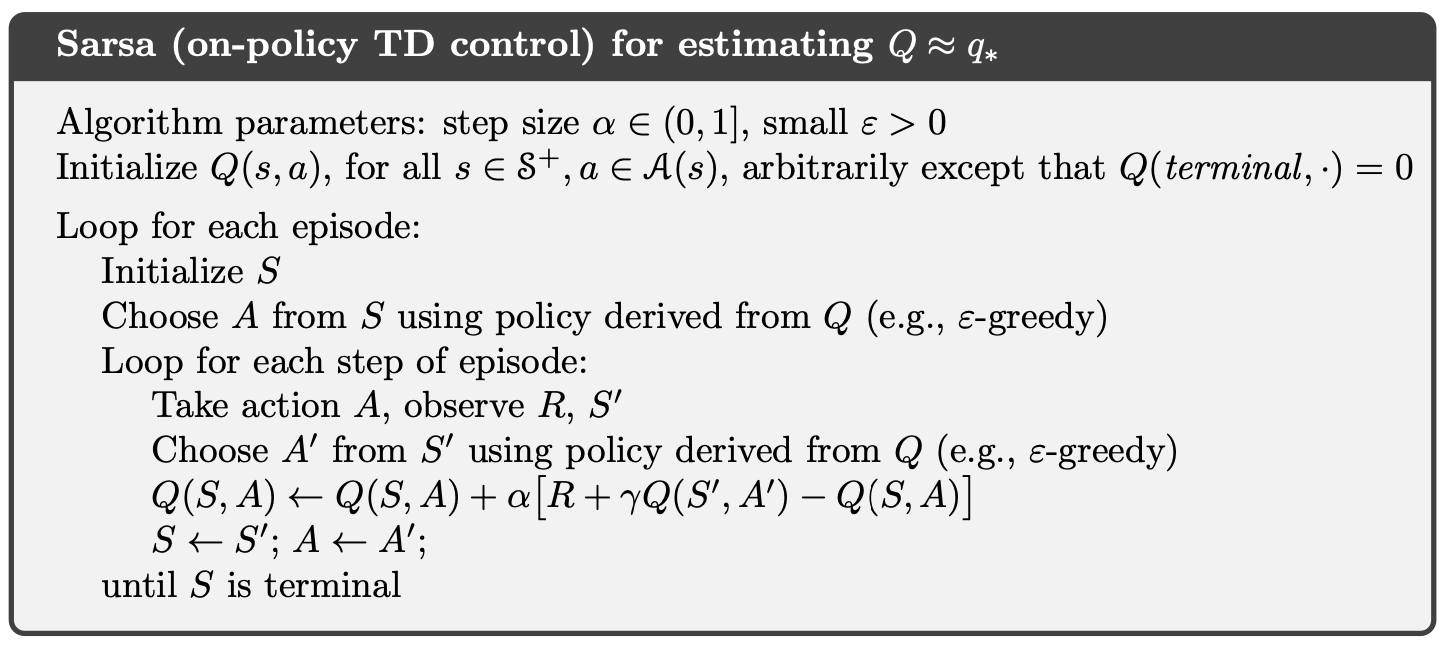

Sarsa: On-policy TD Control

In the previous section, we considered transitions from state to state and learned the values of states. Now we consider transitions from state-action pair to state-action pair and learn the values of state-action pairs. Formally these cases are identical: they are both Markov chains with a reward process. The theorems assuring the convergence of state values under also apply to the corresponding algorithm for action values:

This update is done after every transition from a nonterminal state . If is terminal, then is defined as zero. This rule uses every element of the quintuple of events, , that make up a transition from one state-action pair to the next. This quintuple gives rise to the name Sarsa for the algorithm.

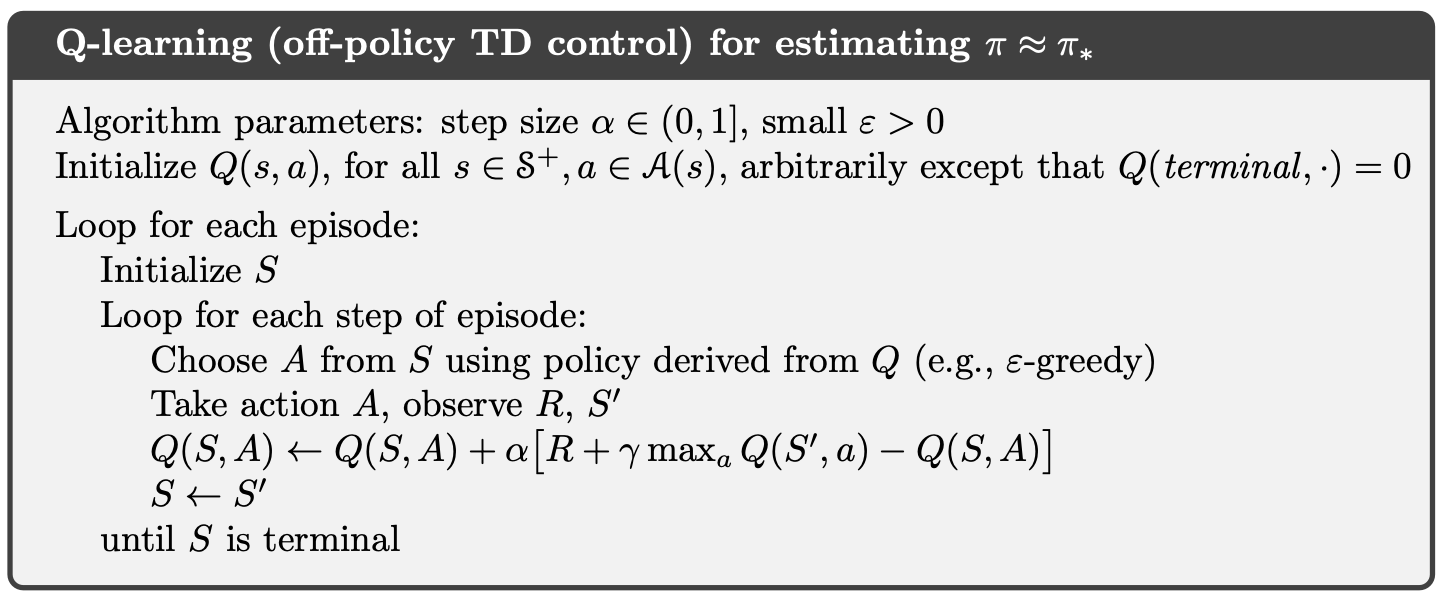

Q-learning: Off-policy TD Control

One of the early breakthroughs in reinforcement learning was the development of an off-policy TD control algorithm known as Q-learning (Watkins, 1989), defined by

In this case, the learned action-value function, , directly approximates , the optimal action-value function, independent of the policy being followed. This dramatically simplifies the analysis of the algorithm and enabled early convergence proofs. The policy still has an effect in that it determines which state-action pairs are visited and updated. However, all that is required for correct convergence is that all pairs continue to be updated.

Concurrent Q-Learning

- Check out Concurrent Q-Learning here.

Expected Sarsa

Instead of updating our value function with the value maximizing action at (as is the case with Q-learning) or with the action prescribes by our -greedy policy (as is the case with SARSA), we could make updates based on the expected value of at . This is the premise of expected sarsa. Doing so reduces the variance induced by selecting random actions according to an -greedy policy. Its update is described by:

We can adapt expected SARSA to be off-policy by making our target policy greedy, in which case expected SARSA becomes Q-learning. It is therefore seen as a generalization of Q-learning that reliably improves over Sarsa.